As Microsoft pushes for businesses to adopt its Microsoft 365 Copilot, many customers have run into a major stumbling block: the AI assistant’s ability to surface confidential information to employees. To deal with the oversharing problem, Microsoft rolled out new tools at its Ignite event last month, including new features in SharePoint Advanced Management and Purview, alongside a blueprint guide to deploying the generative AI (genAI) assistant.

“AI tools like Copilot are an increasing concern for data security professionals due to the amount and nature of data that these tools have access to,” said Jennifer Glenn, research director for IDC’s Security and Trust Group. “Since Microsoft 365 is pervasive in the enterprise, the concerns about Copilot inappropriately accessing or sharing data is a concern I’ve heard often.”

She added that the new data governance and security tools from Microsoft to address oversharing are “essential for enterprises to feel confident in adopting AI tools like Copilot.”

The ability to sift through broad swaths of corporate data and retrieve information for a user is one of M365 Copilot’s strengths. Responses are grounded in the information held across an organization’s M365 environment, such as Word docs, emails, Teams messages, and more. The downside to that feat? Copilot’s language models can also access sensitive files that aren’t locked down.

For some organizations, this has resulted in employees gaining access to confidential data through Copilot’s responses: payroll data, confidential legal files, even top-secret company strategy documents. In principle, any information Copilot can access could surface this way.

“AI is really good at finding information, and it can surface more information than you would have expected,” said Alex Pozin, director of product marketing at Microsoft, during a session at Ignite. “This is why it’s really important to address oversharing.”

The implication for businesses is that “it can be hard to get started with AI in the first place, because you have to address these problems before you get there,” he said.

“Typically, these issues are a by-product of collaboration,” said Pozin, pointing in particular to SharePoint sites and OneDrive.

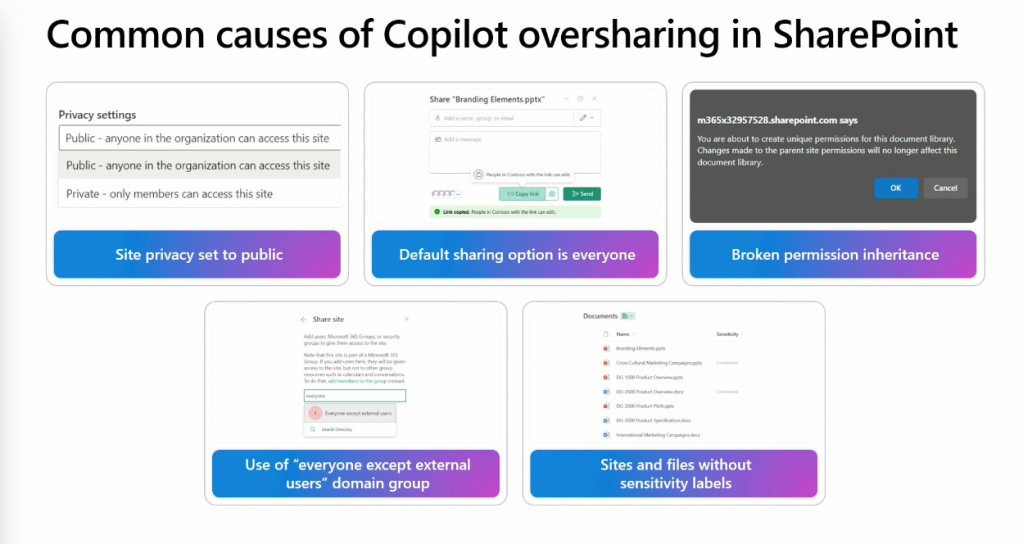

There are a number of reasons Microsoft’s M365 Copilot can “overshare.”

Microsoft

The reasons Copilot can overshare data include site privacy set to “public” rather than “private,” a default file-sharing option that extends access to everyone in an organization, and missing sensitivity labels, among others. Addressing this is no small task: some companies’ sites contain “millions” of files, said Pozin.

Part of Microsoft’s plan is to expand access to SharePoint Advanced Management (SAM). SAM, introduced as part of SharePoint Premium last year, will be included at no extra cost with M365 Copilot subscriptions starting in early 2025. (M365 Copilot costs $30 per user per month; SharePoint Premium costs $3 per user per month.)

There are also new features for SAM that Microsoft says will provide greater control over access to SharePoint files.

For instance, permission state reports (now generally available) can identify “overshared” SharePoint sites, while site access reviews (also now available) provide a way to ask site owners to address permissions.

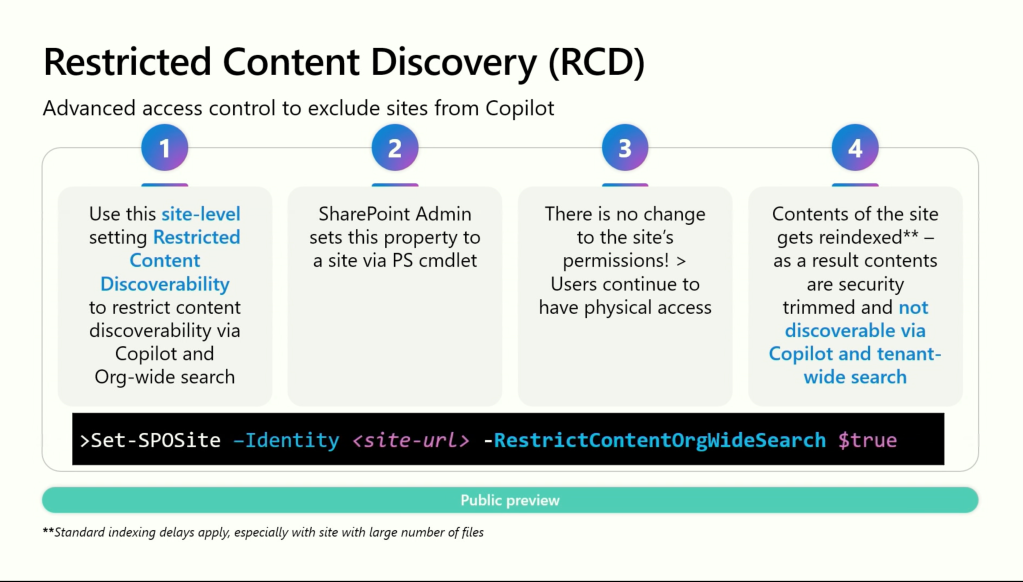

Restricted Content Discovery, which rolls out this month, lets admins prevent Copilot from searching a site and processing content; otherwise, the site will remain unchanged and users can access it as usual. This builds on Restricted Access Control, launched in GA last year, which lets admins take control to restrict site access to site owners only, while also preventing Copilot from summarizing files at the same time (intended as a temporary measure, Microsoft said).

How to restrict Copilot from accessing site data.

Microsoft

“There are situations where you do identify sites that are top secret or kind of ‘secret sauce’ sites where you … want to hide them from Copilot altogether and never risk anyone unintentionally being able to see that content,” said Dave Minasyan, principal product manager at Microsoft, during the Ignite session. “Restricted Content Discovery is the way to do that … you can surgically lock down or hide sites from Copilot reasoning.”
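To make the difference between the two SharePoint controls concrete, here is a minimal conceptual sketch in Python. It is not Microsoft’s API or implementation: the `Site` class, its flag names, and both functions are hypothetical. It encodes only the behavior described above (Restricted Content Discovery hides a site from Copilot but leaves direct access alone; Restricted Access Control limits access to site owners and also blocks Copilot), and it assumes, as the oversharing problem implies, that Copilot otherwise works within the content a user can already open.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Site:
    """Hypothetical SharePoint site record; field names are illustrative only."""
    name: str
    owners: Set[str]
    members: Set[str] = field(default_factory=set)
    # Hypothetical flags mirroring the two controls described in the article.
    restricted_content_discovery: bool = False  # hidden from Copilot only
    restricted_access_control: bool = False     # owners-only access, no Copilot


def user_can_open(site: Site, user: str) -> bool:
    """Direct (non-Copilot) access to the site."""
    if site.restricted_access_control:
        return user in site.owners
    # Restricted Content Discovery leaves normal access unchanged.
    return user in site.owners or user in site.members


def copilot_can_ground(site: Site, user: str) -> bool:
    """Whether Copilot may search and process the site's content for this user."""
    if site.restricted_content_discovery or site.restricted_access_control:
        return False
    # Assumption: otherwise Copilot sees only what the user could already open.
    return user_can_open(site, user)


if __name__ == "__main__":
    strategy = Site("strategy", owners={"ceo"}, members={"analyst"},
                    restricted_content_discovery=True)
    print(user_can_open(strategy, "analyst"))       # True
    print(copilot_can_ground(strategy, "analyst"))  # False
```

For the “secret sauce” site in the example, a member can still open it directly, but Copilot will not reason over it on anyone’s behalf.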

There are also new tools in Purview — Microsoft’s data security and governance software suite that’s available in E5 subscriptions — to identify overshared files that can be accessed by Copilot.

This includes oversharing assessments for M365 Copilot in Data Security Posture Management (DSPM) for AI, which launched in public preview at Ignite. Accessible in the new Purview portal, the oversharing assessments highlight data that could present a risk by scanning files for sensitive data and identifying repositories, such as SharePoint sites, where access permissions are applied too broadly. They also recommend ways to mitigate oversharing risk, such as adding sensitivity labels or restricting access in SharePoint.
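The oversharing assessment described above combines two signals: whether a repository holds sensitive data and whether its permissions are too broad. The Python sketch below is a hypothetical illustration of that logic, not how Purview DSPM for AI works internally. The data classes, the “public”/“organization” settings (borrowed from the oversharing causes Microsoft lists), and `assess_oversharing` are all assumptions; `contains_sensitive_data` stands in for the result of a sensitive-information scan.

```python
from dataclasses import dataclass, field
from typing import Iterable, Iterator, List, Optional, Tuple


@dataclass
class FileRecord:
    path: str
    contains_sensitive_data: bool           # stand-in for a sensitive-info scan result
    sensitivity_label: Optional[str] = None


@dataclass
class SiteRecord:
    name: str
    privacy: str              # hypothetical values: "public" or "private"
    default_link_scope: str   # hypothetical values: "organization" or "specific_people"
    files: List[FileRecord] = field(default_factory=list)


def assess_oversharing(sites: Iterable[SiteRecord]) -> Iterator[Tuple[str, List[str]]]:
    """Yield (site name, recommendations) for sites whose sensitive files are broadly reachable."""
    for site in sites:
        broad_access = site.privacy == "public" or site.default_link_scope == "organization"
        sensitive = [f for f in site.files if f.contains_sensitive_data]
        if not (broad_access and sensitive):
            continue  # either access is narrow or there is nothing sensitive to expose
        recommendations = []
        if any(f.sensitivity_label is None for f in sensitive):
            recommendations.append("add sensitivity labels")
        recommendations.append("restrict site access")
        yield site.name, recommendations
```

In this model a private site is still flagged if its default sharing link reaches the whole organization, since either setting alone makes its sensitive files broadly reachable.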

Microsoft Purview Data Loss Prevention for M365 Copilot, also in public preview, lets data security admins create data loss prevention (DLP) policies that exclude certain documents from processing by Copilot based on a file’s sensitivity label. The policies apply to files held in SharePoint and OneDrive and can be scoped at different levels, such as group, site, and user, for more flexibility over who can access what.
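A label-based DLP exclusion can be pictured as a filter applied before Copilot processes documents. The Python sketch below is a hypothetical model, not the Purview DLP policy schema or API; `DlpPolicy`, `Document`, and `copilot_processable` are illustrative names. It models only site and user scoping (group scoping is omitted) and assumes a document is blocked when any in-scope policy lists the document’s label as excluded.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Sequence


@dataclass(frozen=True)
class DlpPolicy:
    """Hypothetical label-based exclusion policy (not the Purview schema)."""
    excluded_labels: FrozenSet[str]
    scoped_sites: Optional[FrozenSet[str]] = None  # None means every site
    scoped_users: Optional[FrozenSet[str]] = None  # None means every user

    def applies(self, site: str, user: str) -> bool:
        site_in_scope = self.scoped_sites is None or site in self.scoped_sites
        user_in_scope = self.scoped_users is None or user in self.scoped_users
        return site_in_scope and user_in_scope


@dataclass(frozen=True)
class Document:
    name: str
    site: str
    label: Optional[str]  # None = unlabeled, which no exclusion matches


def copilot_processable(docs: Sequence[Document], user: str,
                        policies: Sequence[DlpPolicy]) -> List[Document]:
    """Return the documents Copilot may process for `user` after label-based exclusions."""
    return [
        doc for doc in docs
        if not any(p.applies(doc.site, user) and doc.label in p.excluded_labels
                   for p in policies)
    ]
```

One consequence is visible in the model: an unlabeled file passes straight through the filter, which is why a lack of sensitivity labels appears among the oversharing causes Microsoft lists.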

Another tool in Purview, Insider Risk Management, can now be used to detect “risky AI usage.” This includes prompts that contain sensitive information and attempts by users to access unauthorized sensitive information. The feature, also in public preview, covers M365 Copilot, Copilot Studio, and ChatGPT Enterprise.

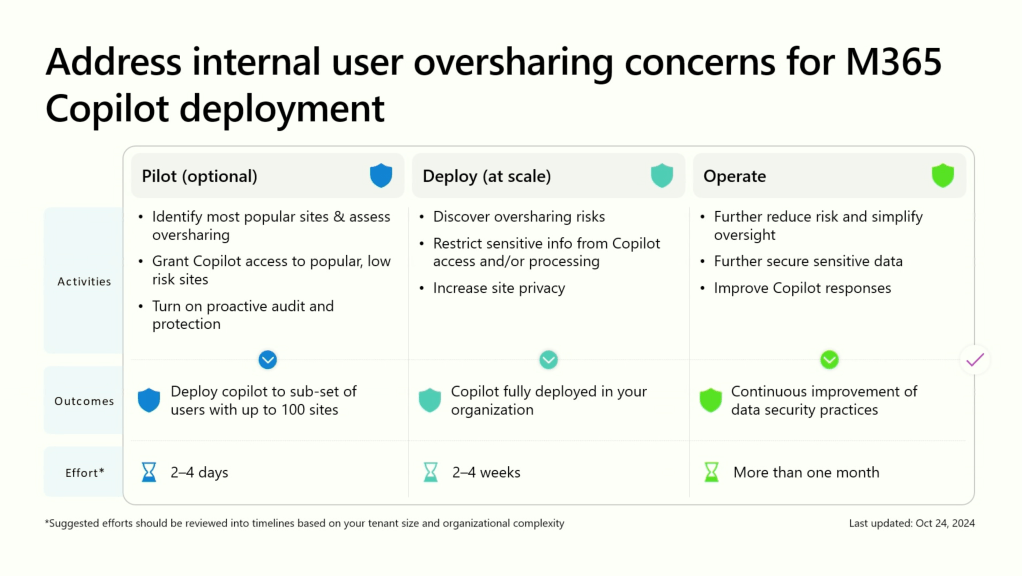

Finally, there’s a new blueprint resource on the Microsoft Learn site that outlines recommended paths to mitigate oversharing during three stages of M365 Copilot rollouts: pilot, deployment, and ongoing operations. There are two blueprints; a more basic “foundational path” and an “optimized path” that uses some of the more advanced data security and governance tools in E5.

Microsoft’s high-level view for deploying M365 Copilot.

Microsoft

“The new built-in M365 Copilot governance controls are long-overdue,” said Jason Wong, distinguished vice president analyst at Gartner. “Immature security controls and oversharing concerns have been top challenges for organizations as they evaluate and implement M365 Copilot in 2024.”

A Gartner survey of 132 IT leaders in June showed that data “oversharing” prompted 40% of respondents to delay M365 Copilot rollouts by three months or more, while 64% claimed information governance and security risks required significant time and resources to deal with during deployments.

Gartner’s survey indicated that businesses are keen to adopt tools that help manage their data: more than a quarter of respondents upgraded their M365 licenses to include some Microsoft Purview services. Nor is Microsoft alone in tackling the issue: third-party software vendors such as Syskit and Varonis also promise to help organizations manage and secure their data when deploying M365 Copilot.

Wong said customers looking to deploy M365 Copilot need to invest in a range of measures to ensure the AI assistant is rolled out securely — all of which plays into the value generated by M365 Copilot.

“Even with the built-in features, factoring Copilot total cost of ownership and ROI figures should include any additional spending and resource commitment needed for security remediation and ongoing operations, including training employees on safely storing and sharing information,” said Wong.